9. October 2018

Everyone remembers the painful data breaches in recent years such as Facebook/Cambridge Analytica, Uber, Equifax. The list [1] is long. These data breaches and the uncareful data management led to new personal data protection laws. Europe’s data protection regulations (GDPR) are active since the 25th of May 2018 and other countries and states followed soon thereafter such as California, with its Consumer Privacy Act which came into effect on the 28th of June. However, numerous companies still grapple with the challenge of effectively enhancing the value of anonymized data.

These data protection regulations require businesses to first ask for consent to collect personal data. Businesses are also expected to protect personal data and allow consumers to access, correct and even erase their personal data (“Right to be forgotten, Art. 17 GDPR”). Data breaches and other violations can lead to fines. For GDPR violations, the fines can be as high as 20 million EUR, or 4% of the worldwide annual revenue of the prior fiscal year, whichever is higher [2].

There are two obvious approaches to comply with these data protection regulations. The first one is to build and maintain a secure infrastructure. Such a system should ask for consent before collecting personal data. Additionally, the infrastructure has to allow users to access, correct and erase their data. The second approach is to remove all the personal identifiable information from the data to effectively anonymize it. This allows a business to use the information totally free and it does not need to grant users access to the data. This also completely removes the risk of leaking personal identifiable during data breaches. It seems that anonymizing data is the way to go if you compare it to building a secure control infrastructure. However, there is a drawback in anonymization.

The Quality of Anonymization

The huge amount of data collected in the last decades, allowed new and old companies to leverage machine learning techniques to build new products and revolutionize old products.

“Data is the new oil” is the new catchphrase that is posited in many economy/technology articles [3]. Recently the quality of collected data is gaining a lot of attention because it improves the quality and robustness of AI systems [4][5].

This is problematic for conventional anonymization methods since they usually operate by selectively destroying pixel information (masking, blurring, pixelization, etc.). Therefore, there is always a tradeoff between compliance and data quality. Compliance and data quality are two endpoints of a line where one cannot be improved without harming the other.

Instead of removing pixel information, our anonymization method changes and keeps it natural. We call it Deep Natural Anonymization (DNAT). DNAT detects faces and other identifiable, such as license plates, and generates an artificial replacement for each one of them. Each generated replacement is constrained to match the attributes of the source object as good as possible. Nevertheless, this constrain is selectively applied, so that we can control which attributes to maintain and which not. For faces, for example, it could be important to keep attributes like gender and age intact for further analytics. Identifiable aside, the rest of the information that does not contain sensitive personal data is kept without modifications. Thus, DNAT effectively breaks up the tradeoff between removing and anonymizing data.

Preserving Analytics

To measure the impact of anonymization approaches on the quality of the data, we sampled images from the Labeled Face in the Wild (LFW) [6] dataset. All images were taken from the test set. We compare four different anonymization tools which represent general groups of anonymization techniques [7][8][9][10]. We selected tools which are generally accessible to the public. Figure 1 shows a selection of these examples.

Fig.1: Comparison of anonymization techniques.

Structural Consistency

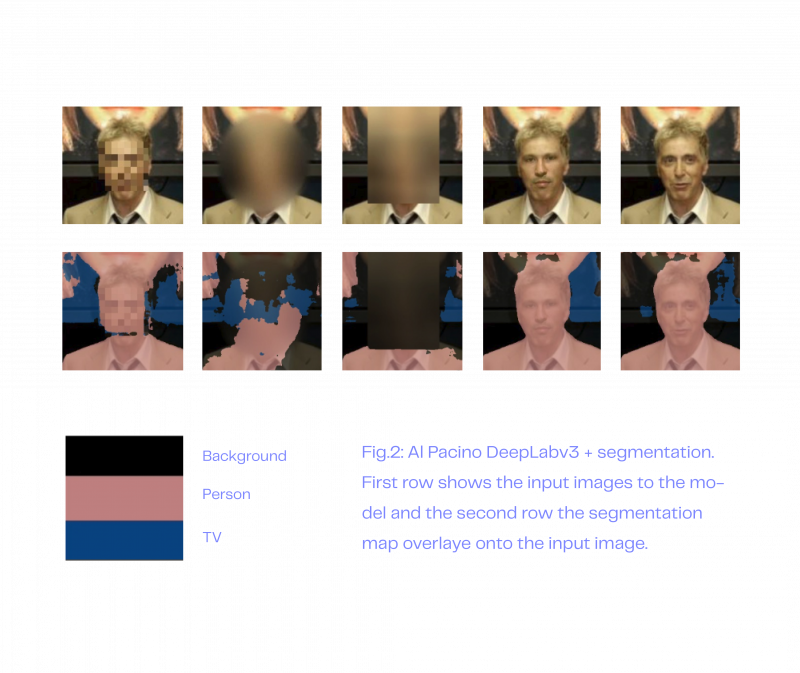

In a first step, we analyze how the overall structure of images changes after they have been processed by the anonymization techniques. For that purpose, we take a closer look at image segmentation results. Image segmentation [11] is the process of partitioning the pixels of an image into multiple segments. Each segment represents one object class. In our example, the most important objects are the person in the profile picture and the background. Figure 2 and 3 show the segmentation maps for two samples of the celebrities in the LFW dataset. The segmentation maps were produced by a state-of-the-art semantic segmentation model called DeepLabv3+ [12]. We used the implementation and model weights from the official TensorFlow repository [12].

In figures 2 and 3 we can see that the segmentation maps of traditional anonymization methods are clearly degraded and some of them are completely wrong. Deep Natural Anonymization, however, preserves the semantic segmentation. The segmentation maps are almost identical compared to the original. From figure 3 we can see that faces images processed by conventional anonymization methods not only produce bad segmentation boundaries but also make the segmentation model infer completely new object classes that were never present in the original image, like cats or bottles.

Fig.3 : Reese witherspoon DeepLabv3+ segmentation. First row shows the input images to the model and the second row the segmentation map overlayed onto the input image.

To quantify the impact of each anonymization technique, we calculated the mean of the intersection over union (mIOU) across the whole test set [13]. The calculation was done between the segmentation maps of the images generated by the different methods and the original ones. The results are reported in table 1.

Table 1: Semantic segmentation consistency measured with mIOU.Higher is better.

Content consistency

To asses the general content consistency between the anonymized images and the original ones, we used an independent image tagging model from Clarifai [14]. “The generic image tagging model recognizes over 11,000 different concepts including objects, themes, moods, and more […]“. The tags describe what the model infers from the input image. Additionally, the model gives confidence for each tag. Figure 4 shows the top 5 concepts, predicted by Clarifai’s public image tagging model [15], of the original and its DNAT version.

Fig.4: Reese Witherspoon top 5 concepts from clarifai. Left: Original. Right DNA (ours).

Ideally, a generic image tagging model should predict the exact same concepts for both the original and the anonymized image. To measure the consistency, we used Clarifai’s solution to predict the concepts in all our test samples for each different anonymization technique. Afterwards, we calculated the mean average precision (mAP) of the top N predicted concepts (where N stands for the amount of different concepts) between the anonymized images and the original ones. With the mAP we evaluate two things: the concurrencies of the predicted concepts and their associated scores. As an example, consider an anonymized image and its original pair that have been processed by the image tagging model. A concept with a lower confidence value in the anonymized image with respect to its original pair will have less impact on the final mAP score than a concept that occurs only in the anonymized image, and not in its original pair. The results for the top 5 and top 50 concepts are listed in table 2.

Table 2: Image concept consistency measured with mAP.Higher is better.